Software Supply Chain Security: A Detailed Explanation

Software supply chain attacks cost businesses $45.8 billion globally in 2023 alone, and is projected...

Mar 18, 2024

With the rapid pace of technological evolution, ensuring security within the systems we operate and the software we deploy has never been more crucial. In the world of vulnerability management, we’ve moved from scanning Linux hosts to scrutinizing container images. However, are we looking for vulnerabilities in the right places?

The journey of vulnerability scanning began with simple scripts written to identify vulnerable software on Linux hosts. As the landscape expanded, these scripts matured into projects and eventually into robust tools capable of identifying vulnerabilities across multiple platforms. These tools relied on package manager records and other heuristics to identify the software installed on a Linux host, then cross-referenced the software versions with public vulnerability databases. With the rise of containerized applications, the focus shifted to container images, which package software into standardized units for development, shipment, and deployment. The paradigm did not change much: the idea was still to scan installed software, as before. The difference was that the file system being scanned was no longer local; it was stored as image layers in a container registry.
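To make the host-scanning approach concrete, here is a minimal sketch assuming a Debian-style host: it parses text in the format of the dpkg status database (`/var/lib/dpkg/status`) to list installed packages, then cross-references exact versions against a tiny advisory table. `VULN_DB` and the `EXAMPLE-ADVISORY-*` IDs are hypothetical placeholders; real scanners match version ranges and pull from feeds such as NVD or distribution security trackers.

```python
# Excerpt in the format of Debian's /var/lib/dpkg/status (real stanzas carry many more fields).
DPKG_STATUS = """\
Package: openssl
Status: install ok installed
Version: 3.0.11-1~deb12u1

Package: bash
Status: install ok installed
Version: 5.2.15-2+b2

Package: curl
Status: deinstall ok config-files
Version: 7.88.1-10
"""

# Hypothetical advisory data: package -> exact version -> advisory IDs.
VULN_DB = {"openssl": {"3.0.11-1~deb12u1": ["EXAMPLE-ADVISORY-1"]}}


def installed_packages(status_text: str) -> dict[str, str]:
    """Return {package: version} for stanzas whose Status ends in 'installed'."""
    packages = {}
    for stanza in status_text.strip().split("\n\n"):
        fields = {}
        for line in stanza.splitlines():
            # Continuation lines start with a space; only top-level fields matter here.
            if ": " in line and not line.startswith(" "):
                key, value = line.split(": ", 1)
                fields[key] = value
        if fields.get("Status", "").endswith(" installed"):
            packages[fields["Package"]] = fields["Version"]
    return packages


def match_vulnerabilities(packages: dict[str, str], vuln_db) -> list[tuple[str, str, str]]:
    """Cross-reference installed versions with the advisory table."""
    findings = []
    for name, version in sorted(packages.items()):
        for advisory in vuln_db.get(name, {}).get(version, []):
            findings.append((name, version, advisory))
    return findings


if __name__ == "__main__":
    for name, version, advisory in match_vulnerabilities(installed_packages(DPKG_STATUS), VULN_DB):
        print(f"{name} {version}: {advisory}")
```

Note that `curl` is skipped because its status shows it was removed, which is exactly why scanners read package manager state rather than just listing files.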

In CI/CD pipelines, as developers integrate their code into a shared repository, images are scanned for vulnerabilities even before they are pushed to registries. Depending on the policies in place, an image can be rejected early, which gives developers fast feedback.
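A policy gate of this kind can be sketched in a few lines. The example below assumes the scanner's findings have already been converted into dictionaries with a `severity` key; the `POLICY` thresholds are hypothetical and would be set per organization.

```python
from collections import Counter

# Hypothetical policy: maximum number of findings allowed per severity.
POLICY = {"CRITICAL": 0, "HIGH": 5}


def evaluate_gate(findings: list[dict], policy: dict[str, int]) -> tuple[bool, dict[str, int]]:
    """Return (passed, violations), where violations maps severity -> count over the limit."""
    counts = Counter(f["severity"].upper() for f in findings)
    violations = {sev: counts[sev] for sev, limit in policy.items() if counts[sev] > limit}
    return (not violations, violations)


def gate_exit_code(findings: list[dict], policy: dict[str, int]) -> int:
    """Map the gate result to a process exit code: 0 passes the CI step, 1 rejects the image."""
    passed, violations = evaluate_gate(findings, policy)
    if not passed:
        print(f"Image rejected by policy: {violations}")
    return 0 if passed else 1
```

In a pipeline step this would be called as `sys.exit(gate_exit_code(findings, POLICY))`. Many scanners offer a built-in equivalent; Trivy, for example, combines `--severity` with `--exit-code` to fail a build on selected severities.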

However, this approach has a problem: the scan results only contain the vulnerabilities known at that point in time. Any vulnerabilities disclosed later will be missed, so CI scanning can never be the only line of protection.

When applications are released, their images are stored in registries (such as Docker Hub or private registries). Many registries support built-in scanning (e.g., Harbor, cloud vendor registries, Docker Hub, or Quay.io); however, many of them scan only when an image is pushed. If an image is pulled from the registry a year later, its scan results will be outdated. This is the same problem as with scanning in CI processes.
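Because a scan-on-push result is only a snapshot, one simple mitigation is to record when each image was last scanned and queue a rescan once that result exceeds a freshness budget. The sketch assumes a hypothetical `LAST_SCANNED` record and a 7-day budget; both are illustrative choices, not values from any particular registry.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical record of when each image in a registry was last scanned.
LAST_SCANNED = {
    "registry.example.com/shop/api:1.4.2": datetime(2023, 3, 1, tzinfo=timezone.utc),
    "registry.example.com/shop/web:2.0.0": datetime(2024, 3, 15, tzinfo=timezone.utc),
}


def images_needing_rescan(last_scanned: dict[str, datetime], now: datetime,
                          max_age: timedelta = timedelta(days=7)) -> list[str]:
    """Return images whose latest scan is older than max_age, oldest scan first."""
    stale = [(ts, image) for image, ts in last_scanned.items() if now - ts > max_age]
    return [image for _, image in sorted(stale)]


if __name__ == "__main__":
    print(images_needing_rescan(LAST_SCANNED, datetime.now(timezone.utc)))
```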

Some vendors (Snyk, for example) support scanning registries and keep the results up to date by rescanning periodically. This is very convenient from a technical perspective, since the registry protocol is standardized and registry scanning is a natural evolution of local scanners. On the other hand, the security team has a hard time understanding which images in a given registry are actually in use and where to focus their efforts.

At the end of the day, these images are built to be used, so they are deployed in an operational environment. As a result, many vendors also support scanning images after deployment, regularly rescanning runtime environments to catch latent or newly disclosed vulnerabilities.

Container image registries have become a focal point for vulnerability scanning, largely because, as noted above, scanning them is technically convenient.

While this may be the easy way to do things, looking for something under the lamppost is probably not the best way to ensure your infrastructure’s security. Vulnerability scanning is more analogous to looking for a needle in a haystack. You can be sure that malicious actors are looking too.


It’s essential to revisit the core reason behind vulnerability scanning. At its heart, the goal is to identify and bolster potential weak points in our systems before they can be exploited. We do not have a reason to spend time on vulnerabilities in images that are not used in our systems!

A container registry holds a sh*tload of images that are almost never removed. ARMO’s research shows that even relatively new images (no older than 180 days) have an average of 8 critical and 27 high-severity vulnerabilities. These numbers translate into working hours and engineering effort: first pinpointing and prioritizing what needs to be fixed, and eventually fixing it.

How do you ensure that your registry scan results are up-to-date and relevant for your running systems?!

While it can be useful to scan container images in registries and CI/CD processes, this should not distract us from monitoring vulnerabilities where they really matter: running containers! It is vital to ensure that the software in running containers, and any other software in your ecosystem, is regularly vetted for vulnerabilities. This is the first and last line of defense in vulnerability management.
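One way to apply this is to intersect registry scan results with the images that are actually running. In Kubernetes, each pod's `status.containerStatuses[].imageID` reports the image reference the runtime resolved, typically ending in `@sha256:...`. The sketch below assumes those IDs have already been collected, and that `REGISTRY_FINDINGS` (a hypothetical structure with placeholder digests) maps image digests to finding IDs.

```python
# Hypothetical registry scan results: image digest -> finding IDs (placeholders).
REGISTRY_FINDINGS = {
    "sha256:1111": ["EXAMPLE-1", "EXAMPLE-2"],
    "sha256:2222": ["EXAMPLE-3"],
    "sha256:3333": [],
}


def digest_of(image_id: str) -> str:
    """Extract 'sha256:...' from 'repo@sha256:...'; references without a digest are returned unchanged."""
    return image_id.rsplit("@", 1)[-1]


def prioritize(registry_findings: dict[str, list[str]], running_image_ids: list[str]):
    """Split running images into (findings for running images, running images never scanned)."""
    running = {digest_of(image_id) for image_id in running_image_ids}
    relevant = {d: registry_findings[d] for d in sorted(running) if d in registry_findings}
    unscanned = sorted(running - set(registry_findings))
    return relevant, unscanned


if __name__ == "__main__":
    ids = ["registry.example.com/shop/api@sha256:1111", "docker-pullable://nginx@sha256:4444"]
    print(prioritize(REGISTRY_FINDINGS, ids))
```

Images that are scanned but not running (`sha256:2222` here) drop out of the work queue, while running images with no scan result surface as a blind spot.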

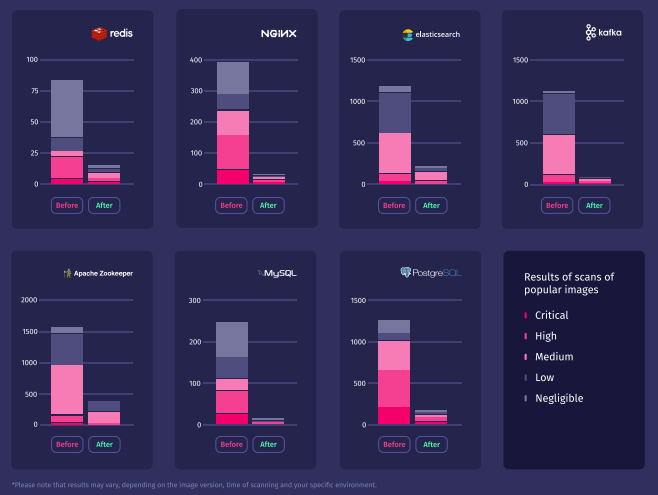

ARMO research from 2023 shows that while public images can introduce many vulnerabilities into your infrastructure, things aren’t as bleak as they seem. Many of the vulnerabilities identified by naive scanners can be deprioritized when viewed through the lens of the software that is actually running.
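As an illustration of that lens (not ARMO’s actual implementation), the sketch below keeps only findings in packages that have at least one file observed in use at runtime. It assumes you already have a mapping from each package to the files it installs and a set of paths the container was seen accessing; how that set is collected is out of scope, and all names and data are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    advisory: str
    package: str
    severity: str


def relevant_findings(findings: list[Finding], package_files: dict[str, list[str]],
                      accessed_paths: set[str]) -> list[Finding]:
    """Keep findings whose package has at least one file that was accessed at runtime."""
    loaded = {pkg for pkg, files in package_files.items() if accessed_paths.intersection(files)}
    return [f for f in findings if f.package in loaded]


if __name__ == "__main__":
    findings = [Finding("EXAMPLE-1", "openssl", "CRITICAL"), Finding("EXAMPLE-2", "perl", "HIGH")]
    package_files = {"openssl": ["/usr/lib/x86_64-linux-gnu/libssl.so.3"], "perl": ["/usr/bin/perl"]}
    accessed = {"/usr/lib/x86_64-linux-gnu/libssl.so.3", "/app/server"}
    print(relevant_findings(findings, package_files, accessed))
```

Deprioritized findings are not discarded: a package that is never loaded today may be loaded after a configuration change, so the full list stays available for periodic review.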

A Software Bill of Materials (SBOM) is a complete inventory of the components in a given software version. Keeping track of the SBOMs of your running software is like having a clear list of the ingredients in a recipe. By regularly comparing those SBOMs against known vulnerabilities, you can gauge your exposure and act promptly.
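A minimal sketch of that comparison, assuming SBOMs in CycloneDX JSON format (whose `components` entries carry a package URL in `purl`) and a hypothetical advisory feed keyed by exact purl. The log4j-core 2.14.1 entry is a real example (Log4Shell, CVE-2021-44228); real feeds express affected version ranges rather than exact matches.

```python
import json

SBOM_JSON = """{
  "bomFormat": "CycloneDX",
  "specVersion": "1.5",
  "components": [
    {"type": "library", "name": "log4j-core", "version": "2.14.1",
     "purl": "pkg:maven/org.apache.logging.log4j/log4j-core@2.14.1"},
    {"type": "library", "name": "jackson-databind", "version": "2.15.2",
     "purl": "pkg:maven/com.fasterxml.jackson.core/jackson-databind@2.15.2"}
  ]
}"""

# Hypothetical advisory feed: exact package URL -> advisory IDs.
ADVISORIES = {"pkg:maven/org.apache.logging.log4j/log4j-core@2.14.1": ["CVE-2021-44228"]}


def sbom_purls(sbom_text: str) -> set[str]:
    """Collect package URLs from a CycloneDX JSON SBOM, skipping components without one."""
    sbom = json.loads(sbom_text)
    return {c["purl"] for c in sbom.get("components", []) if "purl" in c}


def exposure(sbom_text: str, advisories: dict[str, list[str]]) -> dict[str, list[str]]:
    """Return the components in the SBOM that appear in the advisory feed."""
    return {purl: advisories[purl] for purl in sorted(sbom_purls(sbom_text)) if purl in advisories}


if __name__ == "__main__":
    print(exposure(SBOM_JSON, ADVISORIES))
```

Because only the stored SBOM is re-evaluated, this check can rerun whenever the advisory feed updates, without pulling or rescanning the image.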

When a new vulnerability is discovered, it is crucial to give security teams an easy way to query whether the specific vulnerability or vulnerable component is deployed in one of the live systems. This way security teams can assess the situation quickly and prepare a response plan if needed.
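Such a query can be sketched over an inventory that maps each running workload to the package URLs in its SBOM. The `INVENTORY` contents, workload names, and the exact-version `affected_versions` parameter are hypothetical simplifications; a production system would evaluate the version ranges published in the advisory.

```python
# Hypothetical inventory: running workload -> package URLs from its SBOM.
INVENTORY = {
    "prod/checkout-api": {
        "pkg:maven/org.apache.logging.log4j/log4j-core@2.14.1",
        "pkg:deb/debian/openssl@3.0.11-1~deb12u1",
    },
    "prod/web-frontend": {"pkg:npm/express@4.18.2"},
    "staging/reports": {"pkg:maven/org.apache.logging.log4j/log4j-core@2.17.1"},
}


def split_purl(purl: str) -> tuple[str, str]:
    """Split 'pkg:type/namespace/name@version' into (package, version), ignoring qualifiers and subpath."""
    base = purl.split("?", 1)[0].split("#", 1)[0]
    if "@" not in base:
        return base, ""
    package, _, version = base.rpartition("@")
    return package, version


def who_runs(inventory: dict[str, set[str]], package: str,
             affected_versions: set[str]) -> list[tuple[str, str]]:
    """List (workload, version) pairs where an affected version of the package is running."""
    hits = []
    for workload, purls in inventory.items():
        for purl in purls:
            pkg, version = split_purl(purl)
            if pkg == package and version in affected_versions:
                hits.append((workload, version))
    return sorted(hits)


if __name__ == "__main__":
    print(who_runs(INVENTORY, "pkg:maven/org.apache.logging.log4j/log4j-core", {"2.14.0", "2.14.1"}))
```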

Vulnerability scanning is a vital part of cybersecurity, but it’s important to focus on the right places. While it can be useful to scan container images in registries and CI/CD processes, the most important thing is to scan the software that is actually running in your production environment.

To do this, you need to keep track of your running SBOMs and vulnerabilities. This will give you a clear inventory of the components in your software, and you can then regularly compare it against known vulnerability databases to identify any potential risks.

When a new vulnerability is discovered, you should be able to quickly query your SBOMs to see if it is present in any of your running systems. This will allow you to assess the situation and prepare a response plan if needed. Even better, your system can automatically notify you about newly discovered vulnerabilities in your live systems.

By focusing on the right places for vulnerability scanning, you can help to protect your organization from cyberattacks.
