Introducing Full Workload Inventory Visibility in ARMO: See What’s Running, What It’s Doing, and How It’s Protected

At ARMO, our mission is to make Kubernetes security more accessible, actionable, and effective. That’s...

May 21, 2024

Securing Kubernetes environments is a continuous task, and the journey is fraught with challenges, particularly when it comes to addressing misconfigurations. This blog post examines how to secure Kubernetes without disrupting applications, covering the challenges involved and proposing strategies for resolving them effectively.

Kubernetes misconfiguration scanners operate by analyzing manifest configurations and identifying potential security issues within pods, deployments, and other resources. These tools use predefined security criteria to validate configurations against best practices. They assess the adherence of Kubernetes setups to recommended security measures, assigning risk scores to detected issues. These scanners play a crucial role in enhancing the overall security posture of Kubernetes environments by proactively identifying and addressing misconfigurations. Users can leverage both built-in and custom policies to ensure compliance and robust security practices across Docker, Kubernetes, Terraform, and CloudFormation deployments.
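
To make the mechanism concrete, here is a minimal sketch of a rule-based manifest scanner in Python. It is not ARMO's or any specific tool's implementation. The pod is modeled as a plain dict that mirrors the Kubernetes manifest fields, and the three rules and their risk scores are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    container: str
    rule: str
    risk: int  # illustrative 1-10 score, not any real scanner's scoring model


# Each rule is (name, risk score, predicate over a container's securityContext).
# The .get() defaults model what happens when a field is left unset.
RULES = [
    ("privileged container", 9,
     lambda sc: sc.get("privileged", False)),
    ("privilege escalation allowed", 6,
     lambda sc: sc.get("allowPrivilegeEscalation", True)),
    ("writable root filesystem", 4,
     lambda sc: not sc.get("readOnlyRootFilesystem", False)),
]


def scan_pod(pod: dict) -> list[Finding]:
    """Check every container in a pod manifest against RULES, highest risk first."""
    findings = []
    for container in pod["spec"].get("containers", []):
        sc = container.get("securityContext", {})
        for name, risk, check in RULES:
            if check(sc):
                findings.append(Finding(container["name"], name, risk))
    return sorted(findings, key=lambda f: f.risk, reverse=True)


pod = {"spec": {"containers": [
    {"name": "app", "securityContext": {"privileged": True}},
    {"name": "sidecar", "securityContext": {
        "allowPrivilegeEscalation": False, "readOnlyRootFilesystem": True}},
]}}
for f in scan_pod(pod):
    print(f.container, f.rule, f.risk)
```

The scanner only sees the manifest. It flags `app` the same way whether or not the application actually needs privileged mode, which is the blind spot discussed below.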

Kubernetes environments are dynamic. Configurations must evolve to meet changing requirements and maintain the desired state of the infrastructure. Implementing security measures without causing disruptions requires real-time updates and an understanding of the application’s dependencies.

Relying solely on regular Kubernetes misconfiguration scanners can be risky. These tools often lack awareness of the specific behavior of the application workloads they are examining. As a result, their remediation advice, while based on security best practices, may be overly generic, and applying it can have unintended consequences that disrupt the application. Users should therefore validate any suggested remediation before applying it. This underscores the importance of combining automated scanning with contextual insight into application behavior: application-aware scanners provide more accurate and secure remediation advice.

A hostPath volume mounts a file or directory from the host node’s filesystem into a Pod.

What is the challenge with removing the mounted host path?

If you remove the hostPath volume mount (the container’s volumeMounts entry and its mountPath) from your Kubernetes app, the volume is no longer mounted anywhere in the container. The mountPath specifies the location within the container’s filesystem where the volume is mounted. Without it, the application might be unable to access the data or configuration it expects to find at that path.
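
A hostPath mount is declared in two places: a `volumes` entry with a `hostPath` source, and a `volumeMounts` entry (with its `mountPath`) in the container. Because a volumeMount must name a volume declared in the same pod, removing the mount safely means removing both halves. The sketch below models the pod as a plain Python dict, removes both halves, and reports which container paths lose their data. The function names and the example volume are hypothetical, and only regular containers (not init containers) are handled.

```python
import copy


def hostpath_volumes(pod: dict) -> set[str]:
    """Names of the pod's volumes that use a hostPath source."""
    return {v["name"] for v in pod["spec"].get("volumes", []) if "hostPath" in v}


def remove_volume(pod: dict, volume_name: str) -> tuple[dict, list[str]]:
    """Return a patched copy of the pod without the volume or any mount of it,
    plus the "container:mountPath" locations that are no longer backed by it.
    Only spec.containers is handled in this sketch (not initContainers)."""
    patched = copy.deepcopy(pod)
    spec = patched["spec"]
    spec["volumes"] = [v for v in spec.get("volumes", []) if v["name"] != volume_name]
    lost = []
    for c in spec.get("containers", []):
        if "volumeMounts" not in c:
            continue
        kept = []
        for m in c["volumeMounts"]:
            if m["name"] == volume_name:
                lost.append(f'{c["name"]}:{m["mountPath"]}')
            else:
                kept.append(m)
        c["volumeMounts"] = kept
    return patched, lost


pod = {"spec": {
    "volumes": [{"name": "host-logs", "hostPath": {"path": "/var/log"}},
                {"name": "cache", "emptyDir": {}}],
    "containers": [{"name": "app", "volumeMounts": [
        {"name": "host-logs", "mountPath": "/host/logs"},
        {"name": "cache", "mountPath": "/cache"}]}],
}}
patched, lost = remove_volume(pod, "host-logs")
print(lost)
```

Check the `lost` list before applying the change. If the application reads data or configuration from any of those paths, it will fail once the mount is gone.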

If your Kubernetes app’s workload runs in privileged mode (privileged: true in the container’s securityContext) and you remove that setting, the application loses the elevated privileges it had within the container. Removing the privileged setting can enhance the security of your application by reducing the risk of exploitation or unintended actions. However, it’s crucial to understand how the change affects the application’s functionality.

What is the challenge with removing the privileged capability?

Your application may require privileged access for specific tasks, and removing it without proper consideration may lead to unexpected behavior. Therefore, thoroughly test your application after making such security-related changes to ensure it functions as intended.
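
One way to make that testing explicit is to treat the set of containers that still need privileged mode as an input to the change, rather than flipping the flag everywhere. In the sketch below, `needs_privileged` is a hypothetical input that you would fill in from testing or runtime observation. Everything else only touches the standard `securityContext.privileged` field.

```python
import copy


def is_privileged(container: dict) -> bool:
    """True if the container's securityContext sets privileged: true."""
    return bool(container.get("securityContext", {}).get("privileged", False))


def drop_privileged(pod: dict, needs_privileged: set[str]) -> tuple[dict, list[str]]:
    """Return a copy of the pod with privileged mode turned off for every
    container not listed in needs_privileged, plus the names left privileged."""
    patched = copy.deepcopy(pod)
    kept = []
    for c in patched["spec"].get("containers", []):
        if not is_privileged(c):
            continue
        if c["name"] in needs_privileged:
            kept.append(c["name"])  # still privileged: an accepted risk
        else:
            c["securityContext"]["privileged"] = False
    return patched, kept


pod = {"spec": {"containers": [
    {"name": "app", "securityContext": {"privileged": True}},
    {"name": "node-agent", "securityContext": {"privileged": True}},
]}}
patched, kept = drop_privileged(pod, needs_privileged={"node-agent"})
print([is_privileged(c) for c in patched["spec"]["containers"]], kept)
```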

AllowPrivilegeEscalation determines whether a process can gain more privileges than its parent process. This setting controls whether the no_new_privs flag is set on the container process. It is always true when the container runs as privileged or has the CAP_SYS_ADMIN capability.

The best practice is to set AllowPrivilegeEscalation to false unless it is required.

What is the challenge with setting the AllowPrivilegeEscalation to false?

If your Kubernetes workload relies on privilege escalation and you set AllowPrivilegeEscalation to false, the application’s functionality may be affected. With the setting disabled, a process inside the container can’t gain more privileges than its parent process (for example, through a setuid binary), so any operation that depends on that escalation will fail. It’s crucial to understand the specific requirements of your workload and adjust this setting accordingly.
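
The rule stated above (escalation is always allowed for privileged containers or ones with CAP_SYS_ADMIN) means that setting the field to false only has an effect when neither condition applies. The sketch below encodes that rule over a securityContext dict. It assumes that an unset field leaves escalation allowed, and accepting both the `SYS_ADMIN` and `CAP_SYS_ADMIN` spellings is a simplifying assumption.

```python
def _adds_sys_admin(sc: dict) -> bool:
    added = sc.get("capabilities", {}).get("add", [])
    # Accepting both spellings is a simplifying assumption of this sketch.
    return any(cap in ("SYS_ADMIN", "CAP_SYS_ADMIN") for cap in added)


def escalation_allowed(sc: dict) -> bool:
    """Effective AllowPrivilegeEscalation for a container securityContext."""
    if sc.get("privileged", False) or _adds_sys_admin(sc):
        return True  # always allowed in these two cases
    # Assumption: an unset field leaves escalation allowed.
    return sc.get("allowPrivilegeEscalation", True)


def can_disable_escalation(sc: dict) -> bool:
    """Setting the field to false only matters when neither override applies."""
    return not (sc.get("privileged", False) or _adds_sys_admin(sc))


for sc in [{},
           {"allowPrivilegeEscalation": False},
           {"allowPrivilegeEscalation": False, "capabilities": {"add": ["SYS_ADMIN"]}}]:
    print(escalation_allowed(sc), can_disable_escalation(sc))
```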

Service account tokens verify requests made by in-cluster processes to the Kubernetes API server. For workloads that don’t need to interact with the API server, it’s advisable to avoid automounting service account tokens by setting automountServiceAccountToken to false.

What is the challenge with setting the automountServiceAccountToken to false?

If your Kubernetes workload needs the service account token and you set automountServiceAccountToken to false, the token won’t be mounted automatically. Processes that rely on the token to authenticate with the Kubernetes API server will then fail. Before disabling automounting, make sure your workload can function properly without the token.
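
Two details help when deciding whether disabling is safe. First, the setting can appear both on the Pod spec and on its ServiceAccount. The Pod’s value takes precedence, and if neither sets it, the token is mounted. Second, the token is mounted under `/var/run/secrets/kubernetes.io/serviceaccount`, so a workload that never reads from that directory is a good candidate. The sketch below encodes both points. `observed_file_opens` is a hypothetical list of file paths the workload was seen opening, for example as collected by a runtime sensor.

```python
TOKEN_DIR = "/var/run/secrets/kubernetes.io/serviceaccount"


def token_automounted(pod_spec: dict, service_account: dict) -> bool:
    """Pod-level automountServiceAccountToken wins over the ServiceAccount's;
    when neither sets it, the token is mounted."""
    if "automountServiceAccountToken" in pod_spec:
        return pod_spec["automountServiceAccountToken"]
    return service_account.get("automountServiceAccountToken", True)


def safe_to_disable_token(observed_file_opens: list[str]) -> bool:
    """Heuristic: safe only if the workload never touched the token directory."""
    return not any(p == TOKEN_DIR or p.startswith(TOKEN_DIR + "/")
                   for p in observed_file_opens)


print(token_automounted({}, {}))
print(token_automounted({"automountServiceAccountToken": False},
                        {"automountServiceAccountToken": True}))
print(safe_to_disable_token(["/etc/hosts", TOKEN_DIR + "/token"]))
```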

An immutable root file system restricts applications from modifying their local disk. This restriction helps mitigate intrusions by preventing attackers from altering the file system or introducing unauthorized executables to the disk.

What is the challenge with setting the readOnlyRootFilesystem to true?

If you set the readOnlyRootFilesystem option to true for your container application, it means the container will not be able to make any changes to its root file system. Any attempt to write to the root file system will result in an error.

If your container application needs to write to the filesystem, mount separate writable volumes (for example, emptyDir volumes) on only the specific directories where the application requires write access.
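
The following is a minimal sketch of that pattern. It turns on `readOnlyRootFilesystem` for one container and backs each directory the container must write to with its own `emptyDir` volume. The directories in the example, the image name, and the `<container>-scratch-<n>` volume naming are hypothetical. In practice, the list of directories would come from knowing (or observing) where the application writes.

```python
import copy


def make_root_read_only(pod: dict, container: str, writable_dirs: list[str]) -> dict:
    """Return a copy of the pod where `container` has a read-only root filesystem
    and each directory in writable_dirs is backed by a separate emptyDir volume."""
    patched = copy.deepcopy(pod)
    spec = patched["spec"]
    target = next(c for c in spec["containers"] if c["name"] == container)
    target.setdefault("securityContext", {})["readOnlyRootFilesystem"] = True
    for i, path in enumerate(writable_dirs):
        name = f"{container}-scratch-{i}"  # hypothetical naming scheme
        spec.setdefault("volumes", []).append({"name": name, "emptyDir": {}})
        target.setdefault("volumeMounts", []).append({"name": name, "mountPath": path})
    return patched


pod = {"spec": {"containers": [{"name": "app", "image": "example/app:1.0"}]}}
patched = make_root_read_only(pod, "app", ["/tmp", "/var/cache/app"])
print(patched["spec"]["containers"][0]["volumeMounts"])
```

emptyDir contents are deleted when the Pod is removed from its node. This pattern therefore suits scratch and cache data rather than data that must outlive the Pod.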

Capabilities allow specific named root actions without granting full root access. This approach represents a finer-grained permission model, where only necessary capabilities should be retained in a pod. Unnecessary capabilities should be removed because granting containers excess capabilities can potentially compromise their security, providing attackers with access to sensitive components.

What is the challenge with removing certain insecure capabilities?

The challenge with removing certain capabilities from the securityContext lies in ensuring that the application or process within the container can still function properly without those capabilities. Removing capabilities can sometimes lead to unexpected behavior or errors if the application relies on those capabilities to perform essential actions. Therefore, careful consideration and testing are required when modifying the securityContext to avoid disrupting the functionality of the containerized application.
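
A common way to apply this principle is to drop all capabilities and add back only the ones the application actually uses. In the sketch below, `observed` is a hypothetical set of capability names the workload was seen exercising, for example during runtime monitoring or testing. The sketch outputs a `securityContext.capabilities` block in the usual `drop`/`add` form, plus the currently added capabilities that look safe to remove.

```python
def minimal_capabilities(observed: set[str]) -> dict:
    """Drop everything, then re-add only the capabilities the workload uses."""
    caps = {"drop": ["ALL"]}
    if observed:
        caps["add"] = sorted(observed)
    return caps


def removable_capabilities(current_add: list[str], observed: set[str]) -> list[str]:
    """Currently added capabilities that the workload was never seen using."""
    return sorted(set(current_add) - observed)


observed = {"NET_BIND_SERVICE"}
print(minimal_capabilities(observed))
print(removable_capabilities(["NET_ADMIN", "NET_BIND_SERVICE", "SYS_ADMIN"], observed))
```

The result is only as good as `observed`. A capability used only on a rare code path would be missing from the set and get dropped, which is why testing still matters.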

A hostPath volume mounts a directory or a file from the host into the container. Attackers who have permission to create a new container in the cluster may create one with a writable hostPath volume and gain persistence on the underlying host, for example by creating a cron job on the host.

What is the challenge with ensuring hostPath volume mounts are read-only?

Some applications running in Kubernetes Pods may require write access to certain files or directories within hostPath volumes. Enforcing strict read-only access may conflict with application requirements, necessitating careful consideration and potential application modifications.
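
Read-only access is set per mount, through the `readOnly` field of the container’s `volumeMounts` entry. The sketch below marks every hostPath mount as read-only, except mounts under which the container was seen writing. `observed_writes` (a mapping from container name to written file paths) is a hypothetical input that stands in for runtime observation or testing.

```python
import copy


def _is_under(path: str, mount_path: str) -> bool:
    """True if path is the mount path itself or lies beneath it."""
    mount_path = mount_path.rstrip("/")
    return path == mount_path or path.startswith(mount_path + "/")


def harden_hostpath_mounts(pod: dict, observed_writes: dict[str, list[str]]) -> tuple[dict, list[str]]:
    """Return a copy of the pod with hostPath mounts set to readOnly: true,
    skipping (and reporting) mounts the container was observed writing to."""
    patched = copy.deepcopy(pod)
    spec = patched["spec"]
    host_vols = {v["name"] for v in spec.get("volumes", []) if "hostPath" in v}
    skipped = []
    for c in spec.get("containers", []):
        writes = observed_writes.get(c["name"], [])
        for m in c.get("volumeMounts", []):
            if m["name"] not in host_vols or m.get("readOnly", False):
                continue  # not a hostPath mount, or already read-only
            if any(_is_under(p, m["mountPath"]) for p in writes):
                skipped.append(f'{c["name"]}:{m["mountPath"]}')  # writes seen: leave writable
            else:
                m["readOnly"] = True
    return patched, skipped


pod = {"spec": {
    "volumes": [{"name": "host-logs", "hostPath": {"path": "/var/log"}},
                {"name": "host-etc", "hostPath": {"path": "/etc"}},
                {"name": "cache", "emptyDir": {}}],
    "containers": [{"name": "app", "volumeMounts": [
        {"name": "host-logs", "mountPath": "/host/logs"},
        {"name": "host-etc", "mountPath": "/host/etc"},
        {"name": "cache", "mountPath": "/cache"}]}],
}}
patched, skipped = harden_hostpath_mounts(pod, {"app": ["/host/logs/app.log", "/cache/tmp"]})
print(skipped)
```

The mounts reported in `skipped` are the ones where enforcing read-only access would break the application. They need either an accepted risk or a change to the application.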

“Smart remediation” is a security hardening approach that aims to minimize security risks without interfering with your application’s normal operation. It conducts a thorough analysis of your application to ensure that the recommended fixes are safe and won’t cause any problems with how your application works. Moreover, it provides a detailed explanation for each recommended fix, which outlines the specific changes suggested and the reasons behind them. This ensures that you have a clear understanding of the proposed adjustments.

In a Kubernetes context, eBPF makes it possible to observe application behavior. ARMO Platform, in turn, identifies the correct workload configuration and provides remediation recommendations that offer the best of both worlds. On the one hand, the recommendations are based on best practices drawn from well-known Kubernetes security frameworks. On the other hand, they account for the behavior of the application in question, so the remediation advice doesn’t break the application.
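
The decision logic behind this kind of behavior-aware remediation can be sketched in a few lines. This is an illustration of the idea, not ARMO Platform’s implementation. The control names reuse the Kubernetes fields discussed above. The behavior labels (such as `writes_root_fs`) and the mapping between labels and controls are hypothetical stand-ins for what runtime observation would report.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    control: str
    apply_fix: bool
    explanation: str


# Hypothetical mapping: the observed behavior that a control's fix would break.
BREAKS_IF_OBSERVED = {
    "readOnlyRootFilesystem": "writes_root_fs",
    "privileged": "uses_privileged",
    "automountServiceAccountToken": "uses_sa_token",
}


def recommend(failed_controls: list[str], observed: set[str],
              smart_mode: bool = True) -> list[Recommendation]:
    """Turn failed controls into fix/skip decisions using observed behavior."""
    recs = []
    for control in failed_controls:
        behavior = BREAKS_IF_OBSERVED.get(control)
        if not smart_mode or behavior is None:
            # No behavioral check available (or smart mode off): generic fix.
            recs.append(Recommendation(
                control, True, "Generic best-practice fix; test before applying."))
        elif behavior in observed:
            recs.append(Recommendation(
                control, False, f"Workload exhibits '{behavior}'; accept the risk to keep it running."))
        else:
            recs.append(Recommendation(
                control, True, f"'{behavior}' was never observed; the fix should be safe."))
    return recs


for r in recommend(["readOnlyRootFilesystem", "privileged"], observed={"uses_privileged"}):
    print(r.control, r.apply_fix, "-", r.explanation)
```

Passing `smart_mode=False` falls back to the generic fix for every control, mirroring the option to turn smart remediation off and accept the reliability risk.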

ARMO Platform’s remediation view presents a detailed comparison of recommended fixes with your existing configurations. The suggestions primarily aim to resolve misconfigurations to improve compliance and security posture, according to well-known best practices. “Smart remediation” mode offers an effective way of securing your Kubernetes infrastructure based on the behavior of your workload. It focuses on maintaining application integrity and system stability while reducing unnecessary exposure.

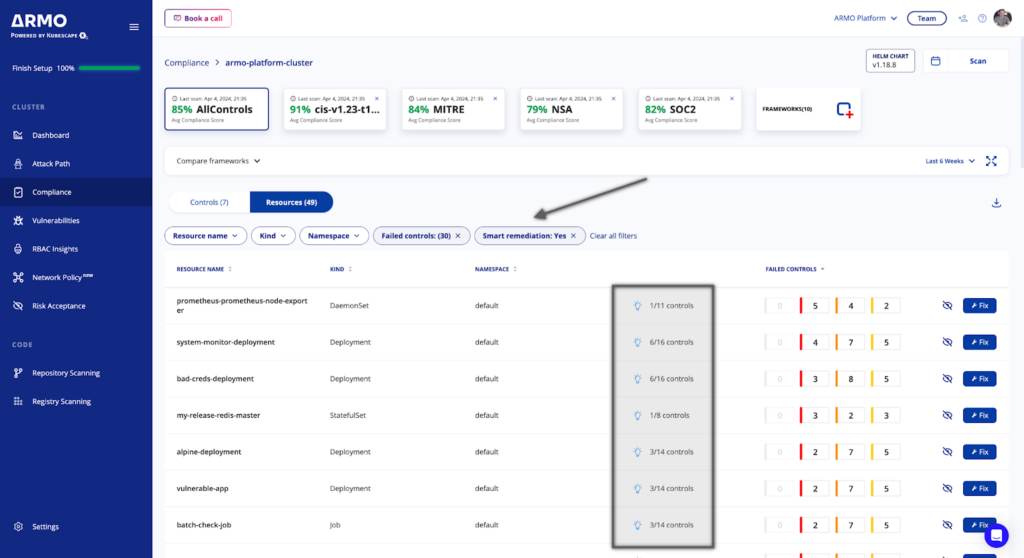

Controls View

Using the “Smart remediation” filter helps you quickly identify controls that offer smart remediation, so you can take action right away. These controls are denoted graphically with a light bulb icon.

To quickly identify the fixes you can confidently apply, switch to the Resources view, and enable the Smart remediation option. This will give you an indication for each of the failed resources, along with a counter that shows how many controls support Smart remediation out of the total failed controls.

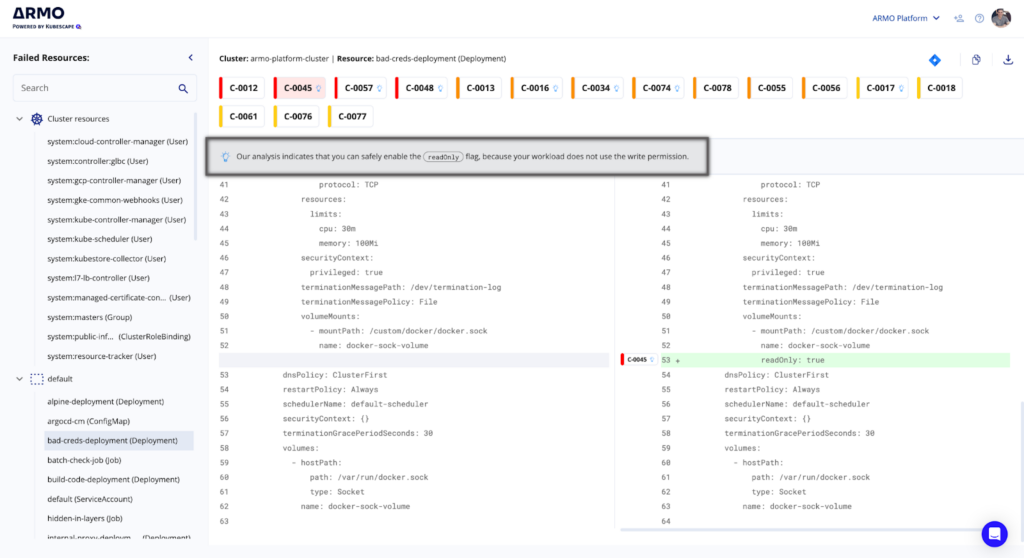

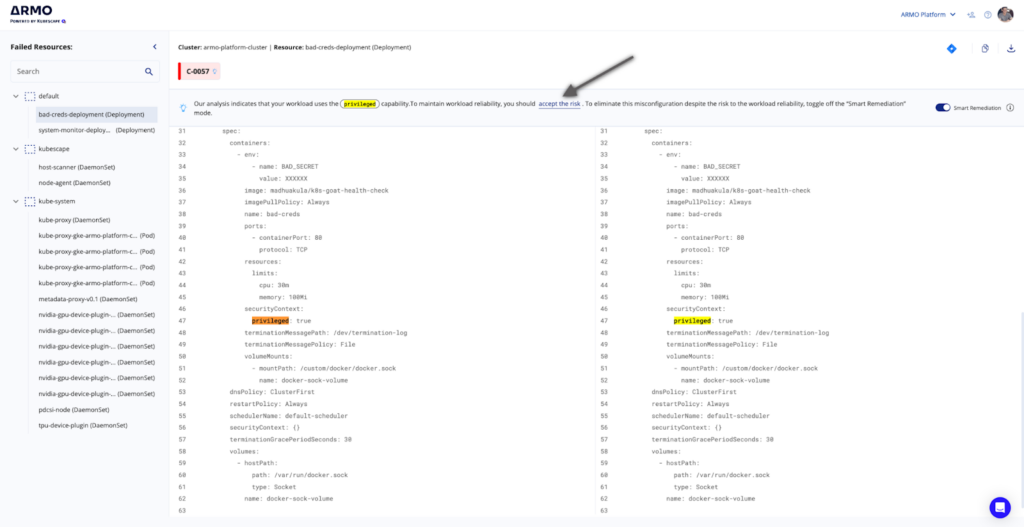

Once you filter by Smart remediation, click the Fix button to get context-aware remediation advice. Controls that support Smart remediation have the blue bulb indication. Once you click on it, you can access the details panel explaining why it’s safe to apply that remediation advice.

Example 1 – “Our analysis indicates that you can safely enable the readOnly flag because your workload does not use the write permission.”

Example 2 – “Our analysis indicates that your workload uses the privileged capability. To maintain workload reliability, you should accept the risk. To eliminate this misconfiguration despite the risk to the workload reliability, toggle off the “Smart remediation” mode.”

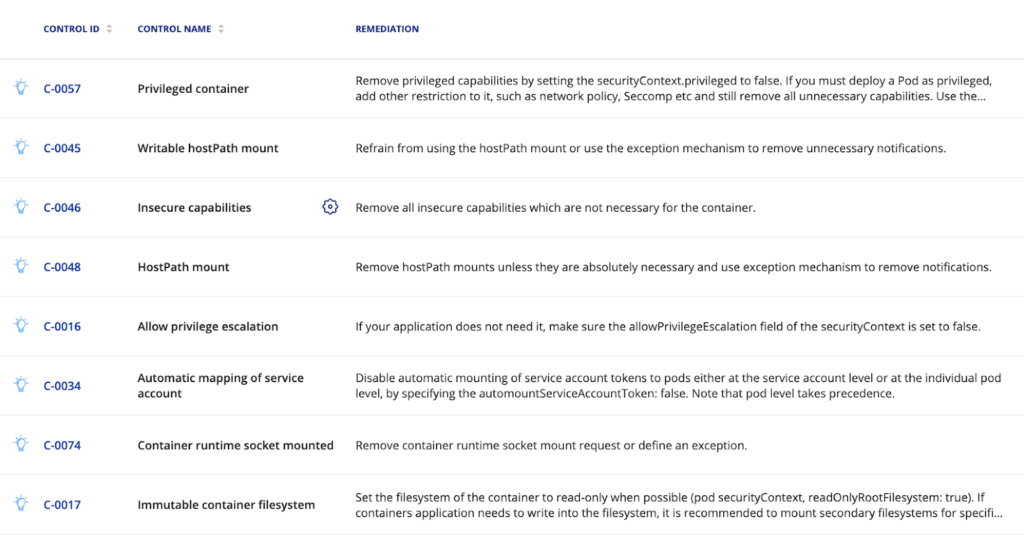

The following controls in ARMO Platform support smart remediation. Additional controls will be added in the future, giving you the confidence to harden your workloads with zero disruption.

Securing Kubernetes without disrupting applications is challenging but not impossible. Understanding the intricacies of misconfigurations, adopting meticulous strategies, and learning from successful case studies can help organizations better secure their Kubernetes environments without compromising operational continuity.

For those readers who would like to take this a step further and get remediation advice that won’t break applications, try ARMO Platform today.
