ARMO named in Gartner® Cool Vendors™ report

We are excited and honored to announce that we were selected as Gartner Cool Vendor...

Jan 16, 2024

In the era of cloud computing, Kubernetes has emerged as a true cornerstone of cloud-native technologies. It’s an orchestration powerhouse for application containers, automating their deployment, scaling, and operations across clusters of hosts. Kubernetes isn’t just a buzzword; it’s a paradigm shift that underpins the scalability and agility of modern software.

While Kubernetes is often associated with the cloud, its adaptability for on-premises infrastructures is a testament to its versatility. Companies that prefer or require on-premises deployments for regulatory, security, or data sovereignty reasons increasingly turn to Kubernetes to leverage cloud-native capabilities within their controlled environments.

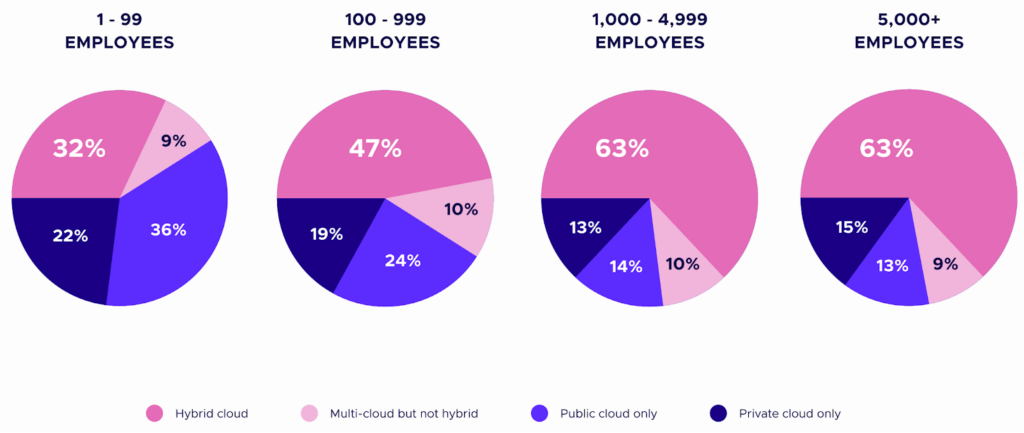

Kubernetes has proven more than capable of bridging the gap between traditional setups and the dynamic microservice architecture that cloud-native practices advocate. The CNCF Annual Survey 2022 offers a glimpse of this trend, highlighting the growing adoption of Kubernetes in on-premises settings: 22% of startup-level organizations use private cloud for their Kubernetes infrastructure, while among larger organizations, with more than 5,000 employees, the figure is 15%.

This blog post seeks to inform and equip technical practitioners with deep insights into securing Kubernetes in an on-premises context.

So, let’s embark on this journey together through Kubernetes, from cloud to core.

At its core, Kubernetes is a platform-agnostic container orchestration system. It’s designed to run anywhere—from your local development machine to high-scale production environments in the cloud and, crucially, on-premises data centers. On-premises Kubernetes is not a mere transplant of its cloud counterpart; it’s a specialized incarnation tailored to address the unique constraints and possibilities of an organization’s private infrastructure.

Kubernetes is a convergence point for traditional practices and cloud-native innovation in these environments. It offers on-premises infrastructures the flexibility to deploy applications with the speed and agility typically associated with the cloud while maintaining the governance, compliance, and security requirements that characterize on-premises solutions.

There are many benefits of adopting Kubernetes on-premises. It enables teams to:

These advantages are not merely theoretical. They represent tangible gains in productivity, cost-efficiency, and agility that can significantly impact an organization’s operational dynamics.

Adopting Kubernetes on-premises comes with a unique set of challenges.

Unlike cloud-hosted solutions, many on-premises setups are not directly managed by cloud vendors. This means that setting up a Kubernetes cluster often involves a more hands-on, vanilla approach that requires in-depth knowledge and manual configuration.

In contrast to cloud environments, on-premises infrastructures require manual hardware provisioning and management; this can be both time-consuming and resource-intensive.

Setting up networking for Kubernetes on-premises is more complex, frequently requiring deep integration with existing network infrastructure; organizations must also address challenges such as overlay networking and ingress control.

While ingress control is a common aspect across cloud-native environments, its implementation in on-premises setups poses specific challenges. In these environments, ingress must be carefully configured to work seamlessly with the existing network architecture, which often includes legacy systems and custom configurations.

Persistent storage in on-premises environments must be carefully managed to provide stateful applications with the robustness they need; this often necessitates integration with existing SAN/NAS solutions or distributed storage systems.

These challenges underscore the need for a nuanced approach to Kubernetes on-premises, one that respects existing investments in infrastructure and expertise while navigating toward a more agile and automated future.

Kubernetes is not a static entity; it’s a continuously evolving ecosystem that adapts to the needs of its users. To address the specific requirements of on-premises environments, Kubernetes has grown to support a variety of add-ons and integrations, including:

Through these capabilities and more, Kubernetes not only bridges the gap between on-premises and cloud environments but also enables a new paradigm of infrastructure management that is resilient and adaptable to change.

In the next section, we will explore the difficulties in managing Kubernetes in an on-premises setting, looking at the role of various Kubernetes providers and the shared responsibility model in these deployments.

Kubernetes has been adopted in on-premises data centers through the support of a growing ecosystem of service providers. While cloud service providers (CSPs) offer managed Kubernetes solutions, the on-premises landscape is enriched by specialized entities such as Rancher/SUSE, VMware vSphere, and Red Hat OpenShift. These providers extend Kubernetes’ reach beyond the cloud, bringing its benefits into the data center.

A multitude of smaller, cloud-agnostic companies such as Giant Swarm and Platform9 also contribute to this diversity, offering fully managed experiences tailored to on-premises needs. These solutions are designed to ease the operational burden, providing a Kubernetes experience that balances the control of on-premises with the convenience of the cloud.

The major CSPs also provide managed on-premises offerings, namely Google Cloud Anthos, Azure Arc, and Amazon EKS Anywhere. These solutions are attractive for organizations that already run CSP-managed Kubernetes in the cloud and want to extend it on-premises, effectively creating a hybrid cloud. However, they will not be the best fit for air-gapped environments.

Managed Kubernetes services are increasingly becoming the gateway for organizations to adopt Kubernetes on-premises without the complexity of setting up and maintaining the entire stack. These services typically provide:

Securing an on-premises Kubernetes environment entails protecting various system components. Let’s review the crucial areas that need strict security measures and the best practices to safeguard your Kubernetes infrastructure.

The etcd database is the heart of a Kubernetes cluster, storing all of the system and service states. Securing etcd is not optional; it’s imperative. Encryption of etcd data at rest prevents unauthorized access to this sensitive information and is a fundamental security practice.
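As a rough illustration of what encryption at rest can look like, the sketch below builds an `EncryptionConfiguration` document of the kind the API server reads through its `--encryption-provider-config` flag. It assumes the `aescbc` provider and encrypts only Secrets; the function name and the key name `key1` are hypothetical, and the output is JSON (which is also valid YAML). Treat it as a starting point under those assumptions rather than a recommended production setup, where a KMS provider may be preferable.

```python
import base64
import json
import secrets


def build_encryption_config(key_name: str = "key1") -> dict:
    """Build an EncryptionConfiguration that encrypts Secrets with AES-CBC.

    The function name and default key name are illustrative assumptions.
    """
    # The aescbc provider expects a 32-byte key, base64-encoded.
    key = base64.b64encode(secrets.token_bytes(32)).decode("ascii")
    return {
        "apiVersion": "apiserver.config.k8s.io/v1",
        "kind": "EncryptionConfiguration",
        "resources": [
            {
                "resources": ["secrets"],
                "providers": [
                    # The first provider in the list is used for new writes.
                    {"aescbc": {"keys": [{"name": key_name, "secret": key}]}},
                    # identity allows reading data stored before encryption
                    # was enabled.
                    {"identity": {}},
                ],
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_encryption_config(), indent=2))
```

The generated file contains key material, so it needs the same protection as any other secret on the control-plane host.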

The Kubernetes API Server acts as the front door to your cluster, making its security configuration a top priority.
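One way to make this concrete is a small audit of the `kube-apiserver` command line. The sketch below checks three commonly hardened flags (`--anonymous-auth`, `--authorization-mode`, and `--client-ca-file`); the choice of checks and the function names are assumptions made for illustration, not an exhaustive or authoritative baseline.

```python
def parse_flags(args):
    """Turn ['--name=value', ...] into {'name': 'value'}; other args are ignored."""
    flags = {}
    for arg in args:
        if arg.startswith("--") and "=" in arg:
            name, value = arg[2:].split("=", 1)
            flags[name] = value
    return flags


def audit_api_server(args):
    """Return a list of findings for a kube-apiserver argument list.

    The three checks are an illustrative subset, not a complete benchmark.
    """
    flags = parse_flags(args)
    findings = []

    # Anonymous requests are allowed unless explicitly disabled.
    if flags.get("anonymous-auth", "true") != "false":
        findings.append("anonymous-auth is not disabled")

    # Without the flag, the API server falls back to AlwaysAllow.
    modes = flags.get("authorization-mode", "AlwaysAllow").split(",")
    if "RBAC" not in modes or "AlwaysAllow" in modes:
        findings.append("authorization-mode should include RBAC and not AlwaysAllow")

    # A client CA bundle is needed to authenticate client certificates.
    if "client-ca-file" not in flags:
        findings.append("client-ca-file is not set")

    return findings


if __name__ == "__main__":
    example = [
        "kube-apiserver",
        "--anonymous-auth=false",
        "--authorization-mode=Node,RBAC",
        "--client-ca-file=/etc/kubernetes/pki/ca.crt",  # example path
    ]
    print(audit_api_server(example))
```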

The kubelet serves as the primary node agent. It manages the state of each node, making sure your containers are running properly.

Securing communication between Kubernetes components is crucial to prevent man-in-the-middle attacks and unauthorized data access.

Components (API server, scheduler, controller manager, etc.) must communicate over secure channels to ensure the integrity and confidentiality of their interactions.
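To show what a "secure channel" means in practice, here is a minimal sketch of a mutually authenticated TLS server context using Python's standard `ssl` module. Kubernetes components are not written in Python and configure TLS through their own flags; the helper names and file parameters below are hypothetical, and the example only illustrates the two properties that matter: a minimum protocol version and mandatory client certificates.

```python
import ssl


def harden(ctx: ssl.SSLContext) -> ssl.SSLContext:
    """Apply the two settings that make the channel mutually authenticated."""
    # Refuse anything older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # Require the peer to present a certificate signed by a trusted CA.
    ctx.verify_mode = ssl.CERT_REQUIRED
    return ctx


def build_mtls_server_context(cert_file: str, key_file: str, ca_file: str) -> ssl.SSLContext:
    """Build a server-side context; all three paths are caller-supplied."""
    ctx = harden(ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER))
    # Certificate and key this server presents to clients.
    ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    # CA bundle used to verify client certificates.
    ctx.load_verify_locations(cafile=ca_file)
    return ctx
```

With `CERT_REQUIRED` on the server side, a client that cannot present a certificate from the trusted CA fails the handshake, which is the behavior that blocks an unauthenticated party from sitting between two components.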

Let’s extend our discussion to advanced security measures that can further bolster the security posture of an on-premises Kubernetes cluster. We will discuss implementing robust TLS protocols, key rotation practices, and maintaining high-security standards in a dynamic environment.
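Key and certificate rotation usually comes down to a simple rule: renew before expiry, with enough margin to recover from a failed renewal. The sketch below expresses that rule; the 30-day window and the function names are assumptions for illustration, and in a real cluster the expiry dates would come from the certificates themselves.

```python
from datetime import datetime, timedelta, timezone


def needs_rotation(not_after, now=None, renew_before=timedelta(days=30)):
    """Return True when a certificate expires within the renewal window.

    The 30-day default is an illustrative assumption, not a standard.
    """
    now = now or datetime.now(timezone.utc)
    return not_after - now <= renew_before


def due_for_rotation(expiries, now=None):
    """Given {name: not_after}, return the sorted names that should be rotated."""
    return sorted(name for name, not_after in expiries.items()
                  if needs_rotation(not_after, now))


if __name__ == "__main__":
    today = datetime(2024, 1, 16, tzinfo=timezone.utc)
    certs = {
        "apiserver": datetime(2024, 2, 1, tzinfo=timezone.utc),
        "etcd-peer": datetime(2025, 1, 1, tzinfo=timezone.utc),
    }
    print(due_for_rotation(certs, now=today))
```

Running a check like this on a schedule, and alerting on its output, turns rotation from a calendar reminder into a routine operational signal.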

A dynamic environment like Kubernetes, where containers are constantly created and destroyed, requires continuous vigilance to maintain high-security standards.

Organizations must see Kubernetes as a strategic asset. On-premises users benefit from the agility, scalability, and resilience that Kubernetes offers, enabling them to compete in a digital economy while meeting stringent security and compliance requirements. It facilitates a cloud-native approach that is seamlessly integrated with existing infrastructure, bridging the gap between the old and the new, the traditional and the innovative.

Embracing Kubernetes on-premises can be transformative for organizations willing to invest in its potential. With the right approach, tools, and mindset, Kubernetes can drive your on-premises infrastructure into the future of cloud-native computing.

If you are ready to take your on-prem installations into the cloud-native world, don’t forget the importance of securing your Kubernetes infrastructure. ARMO Platform offers an end-to-end Kubernetes security solution that cuts through the noise and brings you the insights and guidance you need, whether on-prem or in the cloud. Join the ranks of security-minded enterprises and start your ARMO experience today.
