Kubernetes RBAC: Deep Dive into Security and Best Practices

This guide explores the challenges of RBAC implementation, best practices for managing RBAC in Kubernetes,...

Mar 21, 2022

Kubernetes Ingress is one of today’s most important Kubernetes resources. First introduced in 2015, it achieved GA status in 2020. Its goal is to simplify and secure the routing mechanism of incoming traffic to your defined services.

Ingress allows you to expose HTTP and HTTPS routes from outside the cluster to services within the cluster, using the traffic routing rules you define on the Ingress resource. Without Ingress, you would need to expose each service separately, most likely with a separate load balancer. This becomes cumbersome and expensive as your application scales and the number of services increases.

At the end of the day, you still need a load balancer or node port since you need to expose the Ingress outside of the cluster. However, you immediately benefit from reducing the number of required load balancers to, in many cases, just a single load balancer.

Moreover, when securing traffic with TLS (SSL), the configuration can be made at different levels, for example at the application level or the load-balancer level. This can lead to inconsistencies in how TLS is implemented, and those inconsistencies become more prominent as your application scales, resulting in increased overhead. With Ingress, TLS can be configured in the Ingress resource itself, reducing the management overhead. This configuration is defined within the cluster, so it can live alongside the other configuration files for your Kubernetes application.

Want to learn how to get started with Kubernetes Ingress? Read on.

Setting up Ingress involves two phases: setting up the Ingress controller and defining Ingress resources. This is because an Ingress resource does nothing on its own; it is implemented by an Ingress controller, such as NGINX or Traefik, which applies the routing and security configuration specified in your Ingress resources. The Kubernetes documentation provides a list of available Ingress controllers, and you may even consider a hybrid approach that runs more than one controller in the same cluster.
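An Ingress resource is tied to a particular controller through an IngressClass. The fragment below is a minimal sketch, assuming the community NGINX Ingress Controller and a class named nginx; many installations create this object for you, so treat it as an illustration rather than a required step.

```yaml
# Sketch only: maps the class name "nginx" to the NGINX Ingress Controller.
# The class name is an assumption chosen to match the examples in this post.
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: nginx
spec:
  # Controller identifier used by the community ingress-nginx project.
  controller: k8s.io/ingress-nginx
```

An Ingress resource then selects this class by setting ingressClassName: nginx in its spec.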

The first phase is the actual deployment of the Ingress controller. What exactly you do here depends on the controller you choose; in this post, we use the NGINX Ingress Controller. The deployment process also differs according to your cluster setup. For example, deploying on a Kubernetes cluster in AWS differs from deploying on bare metal.

For the purpose of this article, we will take one of the simpler routes to demonstrate how an NGINX controller can be installed and set up by leveraging minikube. Once minikube has been installed, simply enable the NGINX controller with the command below:

minikube addons enable ingress

The advantage of leveraging minikube is that when you use the “enable” command, minikube provisions the following for you:

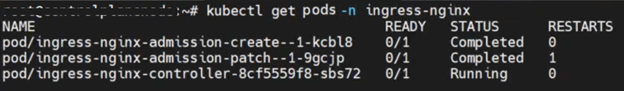

After executing the “enable” command, you can check on your NGINX Ingress Controller by running the following command:

kubectl get pods -n ingress-nginx

You should now be able to see a similar output, as shown in the following image.

Such output will indicate that your NGINX Ingress Controller is running.

When deploying your controller, keep the minikube version in mind. For this article, we used minikube v1.19; an older version may require you to set up the config file and default service manually. Note also that there are many ways to set up and deploy your NGINX controller, depending on how you plan to run your Kubernetes applications.

After you have successfully configured your Ingress controller, you can finally define your Ingress resources. Similar to defining other Kubernetes resources, you can define the Ingress resource in a YAML file.

The current stable apiVersion for the Ingress kind is networking.k8s.io/v1, and it is recommended to always use the latest stable version available. Our basic ingress.yaml file, with two paths, is as follows:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: armo-ingress-example
    spec:
      ingressClassName: nginx
      rules:
        - host: armoexample.com
          http:
            paths:
              - path: /kubernetes
                pathType: Prefix
                backend:
                  service:
                    name: armoservice1
                    port:
                      number: 80
              - path: /kubernetes/security/
                pathType: Prefix
                backend:
                  service:
                    name: armoservice2
                    port:
                      number: 80

The main component of an Ingress resource is the set of rules you define under the resource's ‘spec’. After all, that is the whole point of Ingress: to route and secure traffic according to the rules defined. The example YAML file above routes two paths on the host armoexample.com: /kubernetes to armoservice1 and /kubernetes/security/ to armoservice2. Because both rules use the Prefix path type and the paths overlap, a request for /kubernetes/security/ is served by armoservice2, since the longest matching path takes precedence.
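Each backend named in the rules must exist as a Service in the same namespace as the Ingress. The following is a minimal sketch of what armoservice1 might look like; the pod label app: armoservice1 and the container port 8080 are hypothetical values chosen for illustration, not details from the original setup.

```yaml
# Sketch only: a ClusterIP Service that the Ingress rule can route to.
apiVersion: v1
kind: Service
metadata:
  name: armoservice1
spec:
  selector:
    app: armoservice1     # hypothetical pod label
  ports:
    - port: 80            # matches "port.number: 80" in the Ingress backend
      targetPort: 8080    # hypothetical container port
```

The Service does not need its own load balancer or node port, because external traffic reaches it through the Ingress controller.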

Another thing to notice is the ‘pathType’ field, which defines how your Ingress rules match URL paths. For example, ‘Exact’ matches the URL path exactly as defined, including case, while ‘Prefix’ matches on a path prefix split by ‘/’, so a Prefix rule for /kubernetes also matches /kubernetes/pods. Refer to the documentation to learn about the other path types and the other fields you’ll need to configure to tailor your Ingress resource to your requirements. Finally, after defining your Ingress resource, all you need to do is deploy your application, along with all the services and Ingress components you have built.
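To make the difference between path types concrete, here is a small sketch that uses both in one resource. The resource name and the /healthz and /docs paths are hypothetical; the matching behavior in the comments follows the Kubernetes Ingress specification, and individual controllers may differ in edge cases.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: pathtype-example          # hypothetical name
spec:
  ingressClassName: nginx
  rules:
    - host: armoexample.com
      http:
        paths:
          # Exact: matches only /healthz; /healthz/ and /Healthz do not match.
          - path: /healthz
            pathType: Exact
            backend:
              service:
                name: armoservice1
                port:
                  number: 80
          # Prefix: matches /docs, /docs/ and /docs/intro, but not /docsearch,
          # because matching is done element by element on "/" boundaries.
          - path: /docs
            pathType: Prefix
            backend:
              service:
                name: armoservice2
                port:
                  number: 80
```

A manifest like this is applied in the same way as any other resource, for example with kubectl apply -f followed by the file name.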

As mentioned earlier, there are several ways to set up your Ingress. Consider what you want to achieve with Ingress, since much of that decision goes into picking the right Ingress controller. The issues Ingress solves for you are:

All the major available Ingress controllers solve all these basic issues, but there are also some overheads to consider while setting up your Ingress.

Considering what you can do with Ingress, it is easy to think of an Ingress controller as an API gateway, reducing the need for a separate API gateway resource in your cloud architecture. When selecting an Ingress controller, consider what functionality it provides to achieve API gateway responsibilities such as TLS termination and client authentication.
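As one illustration of client authentication at the Ingress layer, the NGINX Ingress Controller supports HTTP basic authentication through annotations. The sketch below assumes that controller and a pre-existing Secret named basic-auth (a hypothetical name) that holds an htpasswd file under the key auth; other controllers expose this kind of feature differently.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: armo-ingress-auth-example   # hypothetical name
  annotations:
    # These annotations are specific to the NGINX Ingress Controller.
    nginx.ingress.kubernetes.io/auth-type: basic
    # Name of the Secret containing the htpasswd data (assumed to exist).
    nginx.ingress.kubernetes.io/auth-secret: basic-auth
    nginx.ingress.kubernetes.io/auth-realm: "Authentication Required"
spec:
  ingressClassName: nginx
  rules:
    - host: armoexample.com
      http:
        paths:
          - path: /kubernetes
            pathType: Prefix
            backend:
              service:
                name: armoservice1
                port:
                  number: 80
```

Requests without valid credentials are rejected by the controller before they reach armoservice1.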

One of the greatest benefits of Ingress is the ability to secure your application's traffic. Rather than embedding your TLS private key and certificate directly in the Ingress resource, you store them in a Kubernetes Secret and reference that Secret from the Ingress resource.
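A minimal sketch of this pattern follows. The Secret name armo-tls-secret is hypothetical, and the two data values are placeholders that you would replace with your own base64-encoded certificate and key.

```yaml
# Secret of type kubernetes.io/tls holding the certificate and private key.
apiVersion: v1
kind: Secret
metadata:
  name: armo-tls-secret             # hypothetical name
type: kubernetes.io/tls
data:
  tls.crt: <base64-encoded certificate>   # placeholder
  tls.key: <base64-encoded private key>   # placeholder
---
# Ingress that terminates TLS for armoexample.com using the Secret above.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: armo-ingress-example
spec:
  ingressClassName: nginx
  tls:
    - hosts:
        - armoexample.com
      secretName: armo-tls-secret
  rules:
    - host: armoexample.com
      http:
        paths:
          - path: /kubernetes
            pathType: Prefix
            backend:
              service:
                name: armoservice1
                port:
                  number: 80
```

The Secret must live in the same namespace as the Ingress, and the host listed under tls should match a host in the rules.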

There are three types of Ingress configurations, and choosing the right one depends on how you see east-west and north-south traffic being managed within your application:

Ingress is a very powerful component of any Kubernetes application. With its ability to reduce the number of load balancers required and to standardize the way security is configured, Ingress should definitely be considered when working with Kubernetes. However, getting started with Ingress, just like any other Kubernetes concept, may be overwhelming.
